A dissertation submitted in partial fulfillment of the requirements

for the degree of Doctor of Philosophy at George Mason University

By

David A. Wheeler

Master of Science

George Mason University, 1994

Bachelor of Science

George Mason University, 1988

Co-Directors: Dr. Daniel A. Menascé and Dr. Ravi Sandhu,

Professors

The Volgenau School of Information Technology & Engineering

Fall Semester 2009

George Mason University

Fairfax, VA

Copyright © 2009 David A. Wheeler

[PDF is available]

[Errata is available]

[Related information is available]

You may use and redistribute this work under the Creative

Commons Attribution-Share Alike (CC-BY-SA) 3.0 United States

License. You are free to Share (to copy, distribute, display,

and perform the work) and to Remix (to make derivative works),

under the following conditions:

- Attribution. You must attribute the work in the manner

specified by the author or licensor (but not in any way that

suggests that they endorse you or your use of the work).

- Share Alike. If you alter, transform, or build upon this work,

you may distribute the resulting work only under the same, similar

or a compatible license.

Alternatively, permission is also granted to copy, distribute

and/or modify this document under the terms of the GNU Free Documentation

License, Version 1.2 or any later version published by the

Free Software Foundation.

As a third alternative, permission is also granted to copy,

distribute and/or modify this document under the terms of the GNU

General Public License (GPL) version 2 or any later version

published by the Free Software

Foundation.

All trademarks, service marks, logos, and company names

mentioned in this work are the property of their respective

owners.

Dedication

This is dedicated to my wife and children, who sacrificed many

days so I could perform this work, to my extended family, and to

the memory of my former mentors Dennis W. Fife and Donald Macleay,

who always believed in me.

Soli Deo gloria—Glory to God alone.

Acknowledgments

I would like to thank my PhD committee members and former

members Dr. Daniel A. Menascé, Dr. Ravi Sandhu, Dr. Paul

Ammann, Dr. Jeff Offutt, Dr. Yutao Zhong, and Dr. David Rine, for

their helpful comments.

The Institute for Defense Analyses (IDA) provided a great deal

of help. Dr. Roger Mason and the Honorable Priscilla Guthrie,

former directors of IDA’s Information Technology and Systems

Division (ITSD), partly supported this work through IDA’s

Central Research Program. Dr. Margaret E. Myers, current IDA ITSD

director, approved its final release. I am very grateful to my IDA

co-workers (alphabetically by last name) Dr. Brian Cohen, Aaron

Hatcher, Dr. Dale Lichtblau, Dr. Reg Meeson, Dr. Clyde Moseberry,

Dr. Clyde Roby, Dr. Ed Schneider, Dr. Marty Stytz, and Dr. Andy

Trice, who had many helpful comments on this dissertation and/or

the previous ACSAC paper. Reg Meeson in particular spent many hours

carefully reviewing the proofs and related materials, and Clyde

Roby carefully reviewed the whole dissertation; I thank them both.

Aaron Hatcher was immensely helpful in working to scale the Diverse

Double-Compiling (DDC) technique up to a real-world application

using GCC. In particular, Aaron helped implement many applications

of DDC that we thought should have worked with GCC, but

didn’t, and then helped to determine why they

didn’t work. These “Edison successes” (which

successfully found out what did not work) were important in

helping to lead to a working application of DDC to GCC.

Many others also helped create this work. The work of Dr. Paul

A. Karger, Dr. Roger R. Schell, and Ken Thompson made the world

aware of a problem that needed solving; without knowing there was a

problem, there would have been no work to solve it. Henry Spencer

posted the first version of this idea that eventually led to this

dissertation (though this dissertation expands on it far beyond the

few sentences that he wrote). Henry Spencer, Eric S. Raymond, and

the anonymous ACSAC reviewers provided helpful comments on the

ACSAC paper. I received many helpful comments and other information

after publication of the ACSAC paper, including comments from

(alphabetically by last name) Bill Alexander, Dr. Steven M.

Bellovin, Terry Bollinger, Ulf Dittmer, Jakub Jelinek, Dr. Paul A.

Karger, Ben Laurie, Mike Lisanke, Thomas Lord, Bruce Schneier,

Brian Snow, Ken Thompson, Dr. Larry Wagoner, and James Walden.

Tawnia Wheeler proofread both the ACSAC paper and this document;

thank you! My thanks to the many developers of the OpenDocument

specification and the OpenOffice.org implementation, who made

developing this document a joy.

Table of Contents

List of Tables

List of Figures

List

of Abbreviations and Symbols

|

-A

|

not A. Equivalent to

|

|

A & B

|

A and B (logical and). Equivalent to

|

|

A | B

|

A or B (logical or). Equivalent to

|

|

A -> B

|

A implies B. Equivalent to  and and

|

|

ACL2

|

A Computational Logic for Applicative Common Lisp

|

|

ACSAC

|

Annual Computer Security Applications Conference

|

|

aka

|

also known as

|

|

all X A

|

for all X, A. Equivalent to

|

|

ANSI

|

American National Standards Institute

|

|

API

|

Application Programmer Interface

|

|

ASCII

|

American Standard Code for Information Interchange

|

|

BIOS

|

Basic input/output system

|

|

BSD

|

Berkeley Software Distribution

|

|

cA or cA

|

Compiler cA, the compiler-under-test executable (see

sA)

|

|

cGP or cGP

|

Compiler cGP, the putative grandparent of

cA and putative parent of cP

|

|

CNSS

|

Committee on National Security Systems

|

|

cP or cP

|

Compiler P, the putative parent of cA

|

|

CP/M

|

Control Program for Microcomputers

|

|

CPU

|

Central Processing Unit

|

|

cT or cT

|

Compiler cT, a “trusted” compiler (see

section 4.3)

|

|

DDC

|

Diverse Double-Compiling

|

|

DoD

|

Department of Defense (U.S.)

|

|

DOS

|

Disk Operating System

|

|

DRAM

|

Dynamic Random Access Memory

|

|

e1

|

Environment that produces stage1

|

|

e2

|

Environment that produces stage2

|

|

eA

|

Environment that putatively produced cA

|

|

eArun

|

Environment that cA and stage2 are intended to run

in

|

|

EBCDIC

|

Extended Binary Coded Decimal Interchange Code

|

|

ECC

|

Error Correcting Code(s)

|

|

eP

|

Environment that putatively produced cP

|

|

FOL

|

First-Order Logic (with equality), aka first-order predicate

logic

|

|

FS

|

Free Software

|

|

FLOSS

|

Free-Libre/Open Source Software

|

|

FOSS

|

Free/Open Source Software

|

|

FSF

|

Free Software Foundation

|

|

GAO

|

General Accounting Office (U.S.)

|

|

GCC

|

GNU Compiler Collection (formerly the GNU C compiler)

|

|

GNU

|

GNU’s not Unix

|

|

GPL

|

General Public License

|

|

HOL

|

Higher Order Logic

|

|

IC

|

Integrated Circuit

|

|

IDA

|

Institute for Defense Analyses

|

|

iff

|

if and only if

|

|

I/O

|

input/output

|

|

IP

|

Intellectual Property

|

|

ISO

|

International Organization for Standardization (sic)

|

|

ITSD

|

Information Technology and Systems Division

|

|

MDA

|

Missile Defense Agency (U.S.); formerly named SDIO

|

|

MS-DOS

|

Microsoft Disk Operating System (MS-DOS)

|

|

NEL

|

Newline (#x85), used in OS/360

|

|

NIST

|

National Institute of Science and Technology (U.S.)

|

|

OpenBSD

|

Open Berkeley Software Distribution

|

|

OS/360

|

IBM System/390 operating-system

|

|

OSI

|

Open Source Initiative

|

|

OSS

|

Open Source Software

|

|

OSS/FS

|

Open Source Software/Free Software

|

|

PITAC

|

President’s Information Technology Advisory Committee

|

|

ProDOS

|

Professional Disk Operating System

|

|

PVS

|

Prototype Verification System

|

|

QED

|

Quod erat demonstrandum (“which was to be

demonstrated”)

|

|

RepRap

|

Replicating Rapid-prototyper

|

|

S-expression

|

Symbolic expression

|

|

sA or sA

|

putative source code of cA

|

|

SAMATE

|

Software Assurance Metrics And Tool Evaluation (NIST

project)

|

|

SDIO

|

Strategic Defense Initiative Organization (U.S.); later renamed

to the Missile Defense Agency (MDA)

|

|

SHA

|

Secure Hash Algorithm

|

|

sic

|

spelling is correct

|

|

sP or sP

|

putative source code of cP

|

|

SQL

|

Structured Query Language

|

|

STEM

|

Scanning Transmission Electron Microscope

|

|

tcc or TinyCC

|

Tiny C Compiler

|

|

UCS

|

Universal Character Set

|

|

URL

|

Uniform Resource Locator

|

|

U.S.

|

United States

|

|

UTF-8

|

8-bit UCS/Unicode Transformation Format

|

|

UTF-16

|

16-bit UCS/Unicode Transformation Format

|

|

VHDL

|

VHSIC hardware description language

|

|

VHSIC

|

Very High Speed Integrated Circuit

|

|

|

Arbitrary FOL formula

|

|

|

Arbitrary FOL term number x

|

See appendix E for key definitions.

Abstract

Fully Countering Trusting Trust through Diverse

Double-Compiling

David A. Wheeler, PhD

George Mason University, 2009

Dissertation Directors: Dr. Daniel A. Menascé and Dr. Ravi

Sandhu

An Air Force evaluation of Multics, and Ken Thompson’s

Turing award lecture (“Reflections on Trusting Trust”),

showed that compilers can be subverted to insert malicious Trojan

horses into critical software, including themselves. If this

“trusting trust” attack goes undetected, even complete

analysis of a system’s source code will not find the

malicious code that is running. Previously-known countermeasures

have been grossly inadequate. If this attack cannot be countered,

attackers can quietly subvert entire classes of computer systems,

gaining complete control over financial, infrastructure, military,

and/or business systems worldwide. This dissertation’s thesis

is that the trusting trust attack can be detected and effectively

countered using the “Diverse Double-Compiling” (DDC)

technique, as demonstrated by (1) a formal proof that DDC can

determine if source code and generated executable code correspond,

(2) a demonstration of DDC with four compilers (a small C compiler,

a small Lisp compiler, a small maliciously corrupted Lisp compiler,

and a large industrial-strength C compiler, GCC), and (3) a

description of approaches for applying DDC in various real-world

scenarios. In the DDC technique, source code is compiled twice: the

source code of the compiler’s parent is compiled using a

trusted compiler, and then the putative compiler source code is

compiled using the result of the first compilation. If the DDC

result is bit-for-bit identical with the original

compiler-under-test’s executable, and certain other

assumptions hold, then the compiler-under-test’s executable

corresponds with its putative source code.

1 Introduction

Many software security evaluations examine source code, under

the assumption that a program’s source code accurately

represents the executable actually run by the computer. Naïve developers presume

that this can be assured simply by recompiling the source code to

see if the same executable is produced. Unfortunately, the

“trusting trust” attack can falsify this

presumption.

For purposes of this dissertation, an executable that does not

correspond to its putative source code is corrupted. If a corrupted executable was

intentionally created, we can call it a maliciously

corrupted executable. The trusting trust attack occurs

when an attacker attempts to disseminate a compiler executable that

produces corrupted executables, at least one of those produced

corrupted executables is a corrupted compiler, and the attacker

attempts to make this situation self-perpetuating. The attacker may

use this attack to insert other Trojan horse(s) (software that

appears to the user to perform a desirable function but facilitates

unauthorized access into the user’s computer system).

Information about the trusting trust attack was first published

in [Karger1974]; it became widely known through [Thompson1984].

Unfortunately, there has been no practical way to fully detect or

counter the trusting trust attack, because repeated in-depth review

of industrial compilers’ executable code is impractical.

For source code evaluations to be strongly credible, there must

be a way to justify that the source code being examined accurately

represents what is being executed—yet the trusting trust

attack subverts that very claim. Internet Security System’s

David Maynor argues that the risk of attacks on compilation

processes is increasing [Maynor2004] [Maynor2005]. Karger and

Schell noted that the trusting trust attack was still a problem in

2000 [Karger2000], and some technologists doubt that computer-based

systems can ever be secure because of the existence of this attack

[Gauis2000]. Anderson et al. argue that the general risk of

subversion is increasing [Anderson2004].

Recently, in several mailing lists and blogs, a technique to

detect such attacks has been briefly described, which uses a second

(diverse) “trusted” compiler (as will be defined in

section 4.3) and two compilation stages. This dissertation terms

the technique “diverse double-compiling” (DDC). In the

DDC technique, the source code of the compiler’s parent is

compiled using a trusted compiler, and then the putative compiler

source code is compiled using the result of the first compilation

(chapter 4 further explains this). If the DDC result is bit-for-bit

identical with the original compiler-under-test’s executable,

and certain other assumptions hold, then the

compiler-under-test’s executable corresponds with its

putative source code (chapter 5 justifies this claim). Before this

work began, there had been no examination of DDC in detail which

identified its assumptions, proved its correctness or

effectiveness, or discussed practical issues in applying it. There

had also not been any public demonstration of DDC.

This dissertation’s thesis is that the trusting trust

attack can be detected and effectively countered using the

“Diverse Double-Compiling” (DDC) technique, as

demonstrated by (1) a formal proof that DDC can determine if source

code and generated executable code correspond, (2) a demonstration

of DDC with four compilers (a small C compiler, a small Lisp

compiler, a small maliciously corrupted Lisp compiler, and a large

industrial-strength C compiler, GCC), and (3) a description of

approaches for applying DDC in various real-world scenarios.

This dissertation provides background and a description of the

threat, followed by an informal description of DDC. This is

followed by a formal proof of DDC, information on how diversity (a

key requirement of DDC) can be increased, demonstrations of DDC,

and information on how to overcome practical challenges in applying

DDC. The dissertation closes with conclusions and ramifications.

Appendices have some additional detail. Further details, including

materials sufficient to reproduce the experiments, are available

at:

http://www.dwheeler.com/trusting-trust/

This dissertation follows the guidelines of [Bailey1996] to

enhance readability. In addition, this dissertation uses logical

(British) quoting conventions; quotes do not enclose punctuation

unless they are part of the quote [Ritter2002]. Including

extraneous characters in a quotation can be grossly misleading,

especially in computer-related material [Raymond2003, chapter

5].

2

Background and related work

This chapter provides background and related work. It begins

with a discussion of the initial revelation of the trusting trust

attack by Karger, Schell, and Thompson, including a brief

description of “obvious” yet inadequate solutions. The

next sections discuss work on corrupted or subverted compilers, the

compiler bootstrap test, general work on analyzing software, and

general approaches for using diversity to improve security. This is

followed by evidence that software subversion is a real problem,

not just a theoretical concern. This chapter concludes by

discussing the DDC paper published by the Annual Computer Security

Applications Conference (ACSAC) [Wheeler2005] and the improvements

to DDC that have been made since that time.

2.1 Initial revelation: Karger, Schell, and Thompson

Karger and Schell provided the first public description of the

problem that compiler executables can insert malicious code into

themselves. They noted in their examination of Multics

vulnerabilities that a “penetrator could insert a trap door

into the... compiler... [and] since the PL/I compiler is itself

written in PL/I, the trap door can maintain itself, even when the

compiler is recompiled. Compiler trap doors are significantly more

complex than the other trap doors... However, they are quite

practical to implement” [Karger1974].

Ken Thompson widely publicized this problem in his 1984 Turing

Award presentation (“Reflections on Trusting Trust”),

clearly explaining it and demonstrating that this was both a

practical and dangerous attack. He described how to modify the Unix

C compiler to inject a Trojan horse, in this case to modify the

operating system login program to surreptitiously give him root

access. He also added code so that the compiler would inject a

Trojan Horse when compiling itself, so the compiler became a

“self-reproducing program that inserts both Trojan horses

into the compiler”. Once this is done, the attacks could be

removed from the source code. At that point no source code

examination—even of the compiler—would reveal the

existence of the Trojan horses, yet the attacks could persist

through recompilations and cross-compilations of the compiler. He

then stated that “No amount of source-level verification or

scrutiny will protect you from using untrusted code... I could have

picked on any program-handling program such as an assembler, a

loader, or even hardware microcode. As the level of program gets

lower, these defects will be harder and harder to detect”

[Thompson1984]. Thompson’s demonstration also subverted the

disassembler, hiding the attack from disassembly. Thompson

implemented this attack in the C compiler and (as a demonstration)

successfully subverted another Bell Labs group, the attack was

never detected.

Thompson later gave more details about his demonstration,

including assurances that the maliciously corrupted compiler was

never released outside Bell Labs [Thornburg2000].

Obviously, this attack invalidates security evaluations based on

source code review, and recompilation of source code using a

potentially-corrupted compiler does not eliminate the risk. Some

simple approaches appear to solve the problem at first glance, yet

fail to do so or have significant weaknesses:

-

Compiler executables could be manually compared with their

source code. This is impractical given compilers’ large

sizes, complexity, and rate of change.

-

Such comparison could be automated, but optimizing compilers

make such comparisons extremely difficult, compiler changes make

keeping such tools up-to-date difficult, and the tool’s

complexity would be similar to a compiler’s.

-

A second compiler could compile the source code, and then the

executables could be compared automatically to argue semantic

equivalence. There is some work in determining the semantic

equivalence of two different executables [Sabin2004], but this is

very difficult to do in practice.

-

Receivers could require that they only receive source code and

then recompile everything themselves. This fails if the

receiver’s compiler is already maliciously corrupted; thus,

it simply moves the attack location. An attacker could also insert

the attack into the compiler’s source; if the receiver

accepts it (due to lack of diligence or conspiracy), the attacker

could remove the evidence in a later version of the compiler (as

further discussed in section 8.4).

-

Programs can be written in interpreted languages. But eventually

an interpreter must be implemented by machine code, so this simply

moves the attack location.

2.2 Other work on corrupted compilers

Some previous papers outline approaches for countering corrupted

compilers, though their approaches have significant weaknesses.

Draper [Draper1984] recommends screening out maliciously corrupted

compilers by writing a “paraphrase” compiler (possibly

with a few dummy statements) or a different compiler executable,

compiling once to remove the Trojan horse, and then compiling a

second time to produce a Trojan horse-free compiler. This idea is

expanded upon by McDermott [McDermott1988], who notes that the

alternative compiler could be a reduced-function compiler or one

with large amounts of code unrelated to compilation. Lee’s

“approach #2” describes most of the basic process of

diverse double-compiling, but implies that the results might not be

bit-for-bit identical [Lee2000]. Luzar makes a similar point as

Lee, describing how to rebuild a system from scratch using a

different trusted compiler but not noting that the final result

should be bit-for-bit identical if other factors are carefully

controlled [Luzar2003].

None of these papers note that it is possible to produce a

result that is bit-for-bit identical to the original compiler

executable. This is a significant advantage of diverse

double-compiling (DDC), because determining if two different

executables are “functionally equivalent” is extremely

difficult, while determining if two

executables are bit-for-bit identical is extremely easy. These

previous approaches require each defender to recompile their

compiler themselves before using it; in contrast, DDC can be used

as an after-the-fact vetting process by multiple third parties,

without requiring a significant change in compiler delivery or

installation processes, and without requiring that all compiler

users receive the compiler source code. All of these previous

approaches simply move the potential vulnerability somewhere else

(e.g., to the process using the “paraphrase” compiler).

In contrast, an attacker who wishes to avoid detection by DDC must

corrupt both the original compiler and every

application of DDC to that executable, so each application of DDC

can further build confidence that a specific executable corresponds

with its putative source code. Also, none of these papers

demonstrate their technique.

Magdsick discusses using different versions of a compiler, and

different compiler platforms such as central processing unit (CPU)

and operating system, to check executables. However, Magdsick

presumes that the compiler itself will be the same base compiler

(though possibly a different version). He does note the value of

recompiling “everything” to check it [Magdsick2003].

Anderson notes that cross-compilation does not help if the attack

is in the compiler [Anderson2003]. Mohring argues for the use of

recompilation by GCC to check other components, presuming that the

GCC executables themselves in some environments would be pristine

[Mohring2004]. He makes no notice that all GCC executables used

might be maliciously corrupted, or of the importance of diversity

in compiler implementation. In his approach different compiler

versions may be used, so outputs would be “similar” but

not identical; this leaves the difficult problem of comparing

executables for “exact equivalence” unresolved.

A great deal of effort has been spent to develop proofs of

correctness for compilers, either of the compiler itself and/or its

generated results [Dave2003] [Stringer-Calvert1998] [Bellovin1982].

This is quite difficult even for simple languages, though there has

been progress. [Leinenbach2005] discusses progress in verifying a

subset C compiler using Isabelle/Higher Order Logic (HOL).

“Compcert” is a compiler that generates PowerPC

assembly code from Clight (a large subset of the C programming

language); this compiler is primarily written using the

specification language of the Coq proof assistant, and its

correctness (that the generated assembly code is semantically

equivalent to its source program) has been entirely proved within

the Coq proof assistant [Leroy2006] [Blazy2006] [Leroy2008]

[Leroy2009]. [Goerigk1997] requires formal specifications and

correspondence proofs, along with double-checking of resulting

transformations with the formal specifications. It does briefly

note that “if an independent (whatever that is)

implementation of the specification will generate an equal

bootstrapping result, this fact might perhaps increase confidence.

Note however, that, in particular in the area of security... We

want to guarantee the correctness of the generated code, e.g.,

preventing criminal attacks” [Goerigk1997, 17]. However, it

does not explain what independence would mean, nor what kind of

confidence this equality would provide. [Goerigk1999] specifically

focuses on countering Trojan horses in compilers, through formal

verification techniques, but again this requires having formal

specifications and performing formal correspondence proofs. Goerigk

recommends “a posteriori code inspection based on syntactic

code comparison” to counter the trusting trust attack, but

such inspection is very labor-intensive on industrial-scale

compilers that implement significant optimizations. DDC can be

dramatically strengthened by having formal specifications and

proofs of compilers (which can then be used as the trusted

compiler), but DDC does not require them. Indeed, DDC and formal

proofs of compilers can be used in a complementary way: A

formally-proved compiler may omit many useful optimizations (as

they can be difficult or time-consuming to prove), but it can still

be used as the DDC “trusted compiler” to gain

confidence in another (production-ready) compiler.

Spinellis argues that “Thompson showed us that one cannot

trust an application’s security policy by examining its

source code... The recent Xbox attack demonstrated that one cannot

trust a platform’s security policy if the applications

running on it cannot be trusted” [Spinellis2003]. It is worth

noting that the literature for change detection (such as [Kim1994]

and [Forrest1994]) and intrusion detection do not easily address

this problem, because a compiler is expected to accept

source code and generate object code.

Faigon’s “Constrained Random Testing” process

detects compiler defects by creating many random test programs,

compiling them with a compiler-under-test and a reference compiler,

and detecting if running them produces different results [Faigon].

Faigon’s approach may be useful for finding some compiler

errors, but it is extremely unlikely to find maliciously corrupted

compilers.

2.3 Compiler bootstrap

test

A common test for errors used by many compilers (including GCC)

is the so-called “compiler bootstrap test”. Goerigk

formally describes this test, crediting Niklaus Wirth’s 1986

book Compilerbau as proposing this test for detecting errors

in compilers [Goerigk1999]. In this test, if c(s,b) is the result

of compiling source s using compiler executable b, and m is some other compiler (the

“bootstrap” compiler), then:

If m0 and s are both correct and deterministic,

m is correct,

m0=c(s,m),

m1=c(s,m0), m2=c(s,m1), all compilations terminate, and if the

underlying hardware works correctly, then m1=m2.

The compiler bootstrap test goes through steps to determine if

m1=m2; if not, there is a compiler error of some kind. This test

finds many unintentional errors, which is why it is popular. But

[Goerigk1999] points out that this test is insufficient to make

strong claims, in particular, m1 may equal m2 even if m, m0, or s are not

correct. For example, it is trivial to create compiler source code

that passes this test, yet is incorrect, since this test only tests

features used in the compiler itself. More importantly (for

purposes of this dissertation), if m is a maliciously corrupted

compiler, a compilation process can pass this test yet produce a

maliciously corrupted compiler m2. Note that the compiler bootstrap

test does not consider the possibility of using two

different bootstrap compilers (m and m′) and later comparing

their different compiler results (m2 and m2′) to see if they

produce the same (bit-for-bit) result. Therefore, the DDC technique

is not the same as the compiler bootstrap test. However, DDC

does have many of the same preconditions as the compiler

bootstrap test. Since the compiler bootstrap test is popular, many

DDC preconditions are already met by typical industrial compilers,

making DDC easier to apply to typical industrial compilers.

2.4 Analyzing software

All programs can be analyzed to find intentionally-inserted or

unintentional security issues (aka vulnerabilities). These

techniques can be broadly divided into static analysis (which

examines a static representation of the program, such as source

code or executable, without executing it) and dynamic analysis

(which examines what the program does while it is executing).

Formal methods, which are techniques that use mathematics to prove

programs or program models are correct, can be considered a

specific kind of static analysis technique.

Since compilers are programs, these general analysis techniques

(both static and dynamic) that are not specific to compilers can be

used on compilers as well.

2.4.1 Static

analysis

Static analysis techniques examine programs (their source code,

executable, or both) without executing them. Both programs and

humans can perform static analysis.

There are many static analysis programs (aka tools) available;

many are focused on identifying security vulnerabilities in

software. The National Institute of Science and Technology (NIST)

Software Assurance Metrics And Tool Evaluation (SAMATE) project

(http://samate.nist.gov) is

“developing methods to enable software tool evaluations,

measuring the effectiveness of tools and techniques, and

identifying gaps in tools and methods”. SAMATE has collected

a long list of static analysis programs for finding security

vulnerabilities by examining source code or executable code. There

are also a number of published reports comparing various static

analysis tools, such as [Zitser2004], [Forristal2005],

[Kratkiewicz2005], and [Michaud2006]. A draft functional

specification for source code analysis tools has been developed

[Kass2006], proposing a set of defects that such tools would be

required to find and the code complexity that they must be able to

handle while detecting them.

Although [Kass2006] briefly notes that source code analysis

tools might happen to find malicious trap doors, many documents on

static analysis focus on finding unintentional errors, not

maliciously-implanted vulnerabilities. [Kass2006] specifies a

specific set of security-relevant errors that have been made many

times in real programs, and limits the required depth of the

analysis (to make analysis time and reporting manageable).

[Chou2006] also notes that in practice, static analyzers give up on

error classes that are too hard to diagnose. For unintentional

vulnerabilities, this is sensible; unintentional errors that have

commonly occurred in the past are likely to recur (so searching for

them can be very helpful). Unfortunately, these approaches are less

helpful against an adversary who is intentionally inserting

malicious code into a program. An adversary could intentionally

insert one of these common errors, perhaps because they have high

deniability, but ensure that it is so complex that a tool is

unlikely to find it. Alternatively, an adversary could insert code

that is an attack but not in the list of patterns the tools search

for. Indeed, an adversary can repeatedly use static analysis tools

until he or she has verified that the malicious code will

not be detected later by those tools.

Static analysis tools also exist for analyzing executable files,

instead of source code files. Indeed, [Balakrishnan2005] argues

that program analysis should begin with executables instead of

source code, because only the executables are actually run and

source code analysis can be misled. To address this, there are

efforts to compute better higher-level constructs from executable

code, but in the general case this is still a difficult research

area [Linger2006].

[Wysopal] presents a number of heuristics that can be used to

statically detect some application backdoors in executable files.

This includes identifying static variables that “look

like” usernames, passwords, or cryptographic keys, searching

for network application programmer interface (API) calls in

applications where they are unexpected, searching for standard

date/time API calls (which may lead to a time bomb), and so on.

Unfortunately, many malicious programs will not be detected by such

heuristics, and as noted above, attackers can develop malicious

software in ways that specifically avoid detection by the

heuristics of such tools.

Many static analysis tools for executables use the same approach

as many static analysis tools for source code: they search for

specific programs or program fragments known to be problematic. The

most obvious case are virus-checkers; though it is possible to

examine behavior, and some anti-virus programs are increasingly

doing so, historically “anti-virus” programs have a set

of patterns of known viruses, which is constantly updated and used

to search various executables (e.g., in a file or boot record) to

see if these patterns are present [Singh2002] [Lapell2006].

However, as noted in Fred Cohen’s initial work on computer

viruses [Cohen1985], viruses can mutate as they propagate, and it

is not possible to create a pattern listing all-and-only malicious

programs. [Christodorescu2003] attempts to partially counter this;

this paper regards malicious code detection as an

obfuscation-deobfuscation game between malicious code writers and

researchers, and presents an architecture for detecting known

malicious patterns in executables that are hidden by common

obfuscation techniques. Even this more robust architecture does not

work against different malicious patterns, nor against different

obfuscation techniques.

Of course, even if tools cannot find malicious code, detailed

human review can be used at the source or executable level if the

software is critical enough to warrant it. For example, the Open

Berkeley Software Distribution (OpenBSD) operating system source

code is regularly and purposefully examined by a team of people

with the explicit intention of finding and fixing security holes,

and as a result has an excellent security record [Payne2002]. The

Strategic Defense Initiative Organization (SDIO), now named the

Missile Defense Agency (MDA), even developed a set of process

requirements to counter malicious and unintentional

vulnerabilities, emphasizing multi-person knowledge and review

along with configuration management and other safeguards

[SDIO1993].

Unfortunately, the trusting trust attack can render human

reviews moot if there is no technique to counter the attack. The

trusting trust attack immediately renders examination of the source

code inadequate, because the executable code need not correspond to

the source code. Thompson’s attack subverted the symbolic

debugger, so in that case, even human review of the executable

could fail to detect the attack. Thus, human reviews are less

convincing unless the trusting trust attack is itself

countered.

Human review also presumes that other humans examining source

code or executables will be able to detect malicious code. In large

code bases, this can be a challenge simply due to their size and

complexity. In addition, it is possible for an adversary to create

source code that appears to work correctly, yet actually

performs a malevolent action instead. This dissertation uses the

term maliciously misleading code for any source code that is

intentionally designed to look benign, yet creates a vulnerability

(including an attack). The topic of maliciously misleading code is

further discussed in section 8.11.

2.4.2 Dynamic

analysis

It is also possible to use dynamic techniques in an attempt to

detect and/or counter vulnerabilities by examining the activities

of a system, and then halting or examining the system when those

activities are suspicious. A trivial example is execution testing,

where a small set of inputs are provided and the inputs are checked

to see if they are correct. However, dynamic analysis is completely

inadequate for countering the trusting trust attack.

Traditional execution testing is unlikely to counter the

trusting trust attack. Such attacks will only “trigger”

on very specific inputs, as discussed in section 3.2, so even if

the executable is examined in detail, it is extremely unlikely that

traditional execution testing will detect this problem.

Detecting at run-time arbitrary corrupted code in a compiler or

the executable code it generates is very difficult. The fundamental

behavior of a corrupted compiler – that it accepts source

code and generates an executable – is no different from a

uncorrupted one. Similarly, any malicious code a compiler inserts

into other programs can often be made to behave normally in most

cases. For example, a login program with a trap door (a hidden

username and/or password) has the same general behavior: It decides

if a user may log in and what privileges to apply. Indeed, it may

act completely correctly as long as the hidden username and/or

password are not used.

In theory, continuous comparison of an executable’s

behavior at run-time to its source code could detect differences

between the executable and source code. Unfortunately, this would

need to be done all the time, draining performance. Even worse,

tools to do this comparison, given modern compilers producing

highly optimized code, would be far more complex than a compiler,

and would themselves be vulnerable to attack.

Given an extremely broad definition of “system”, the

use of software configuration management tools and change detection

tools like Tripwire [Kim1994] could be considered dynamic

techniques for countering malicious software. Both enable detection

of changes in the behavior of a larger system. Certainly a

configuration management system could be used to record changes

made to compiler source, and then used to enable reviewers to

examine just the differences. But again, such review presupposes

that any vulnerability in an executable could be revealed by

analyzing its source code, a presupposition the trusting trust

attack subverts.

A broader problem is that once code is running, some

programs must be trusted, and at least some of that code will

almost certainly have been generated by a compiler. Any program

that attempts to monitor execution might itself be subverted, just

as Thompson subverted the symbolic debugger, unless there is a

technique to prevent it. In any case, it would be better to detect

and counter malicious code before it executed, instead of

trying to detect malicious code’s execution while or after it

occurs.

2.5 Diversity

for security

There are a number of papers and articles about employing

diversity to aid computer security, though they generally do not

discuss or examine how to use diversity to counter Trojan horses

inside compilers themselves or the compilation environment.

Geer et al. strongly argue that a monoculture (an absence of

diversity) in computing platforms is a serious security problem

[Geer2003] [Bridis2003], but do not discuss employing compiler

diversity to counter this particular attack.

Forrest et al argue that run-time diversity in general is

beneficial for computer security. In particular, their paper

discusses techniques to vary final executables by

“randomized” transformations affecting compilation,

loading, and/or execution. Their goal was to automatically change

the executable (as seen at run-time) in some random ways sufficient

to make it more difficult to attack. The paper provides a set of

examples, including adding/deleting nonfunctional code, reordering

code, and varying memory layout. They demonstrated the concept

through a compiler that randomized the amount of memory allocated

on a stack frame, and showed that the approach foiled a simple

buffer overflow attack [Forrest1997]. Again, they do not attempt to

counter corrupted compilers.

John Knight and Nancy Leveson performed an experiment with

“N-version programming” and showed that, in their

experiment, “the assumption of independence of errors that is

fundamental to some analyses of N-version programming does not

hold” [Knight1986] [Knight1990]. As will be explained in

section 4.7, this result does not invalidate DDC.

2.6

Subversion of software is a real problem

Subversion of software is not just a theoretical possibility; it

is a current problem. One book on computer crime lists various

kinds of software subversion as attack methods (e.g., trap doors,

Trojan horses, viruses, worms, salamis, and logic bombs)

[Icove1995, 57-58]. CERT has

published a set of case studies of “persons who used

programming techniques to commit malicious acts against their

organizations” [Cappelli2008]. Examples of specific software

subversion or subversion attempts include:

-

Michael Lauffenburger inserted a logic bomb into a program at

defense contractor General Dynamics, his employer. The bomb would

have deleted vital rocket project data in 1991, including much that

was unrecoverable, but another employee stumbled onto it before it

was triggered [AP1991] [Hoffman1991].

-

Timothy Lloyd planted a 6-line logic bomb into the systems of

Omega Engineering, his employer, that went off on July 31, 1996.

This erased all of the company’s contracts and proprietary

software used by their manufacturing tools, resulting in an

estimated $12 million in damages, 80 people permanently losing

their jobs, and the loss of their competitive edge in the

electronics market space. Plant manager Jim Ferguson stated flatly,

“We will never recover”. On February 26, 2002, a judge

sentenced Lloyd to 41 months in prison, three years of probation,

and ordered him to pay more than $2 million in damages to Omega

[Ulsh2000] [Gardian].

-

Roger Duronio worked at UBS PaineWebber’s offices in

Weehawken, N.J., and was with the company for two years as a system

administrator. Apparently dissatisfied with his pay, he installed a

logic bomb to detonate on March 4, 2002, and resigned from the

company. When the logic bomb went off, it caused over 1,000 of

their 1,500 networked computers to begin deleting files. This cost

UBS PaineWebber more than $3 million to assess and repair the

damage, plus an undetermined amount from lost business. Duronio was

sentenced to 97 months in federal prison (the maximum per the U.S.

sentencing guidelines), and ordered to make $3.1 million in

restitution [DoJ2006] [Gaudin2006b]. The attack was only a few

lines of C code, which examined the time to see if it was the

detonation time, and then (if so) executed a shell command to erase

everything [Gaudin2006a].

-

An unnamed developer inside Borland inserted a back door into

the Borland/Inprise Interbase Structured Query Language (SQL)

database server around 1994. This was a “superuser”

account (“politically”) with a known password

(“correct”), which could not be “changed using

normal operational commands, nor [deleted] from existing vulnerable

servers”. Versions released to the public from 1994 through

2001 included this back door. Originally Interbase was a

proprietary program sold by Borland/Inprise. However, it was

released as open source software in July

2000, and less than six months later the open source software

developers discovered the vulnerability [Havrilla2001a]

[Havrilla2001b]. The Firebird project, an alternate open source

software package based on the same Interbase code, was also

affected. Jim Starkey, who launched InterBase but left in 1991

before the back door was added to the software in 1994, stated that

he believed that this back door was not malicious, but simply added

to enable one part of the database software to communicate with

another part [Shankland2001]. However, this code had the hallmarks

of many malicious back doors: It added a special account that was

(1) undocumented, (2) cannot be changed, and (3) gave complete

control to the requester.

-

An unknown attacker attempted to insert a malicious back door in

the Linux kernel in 2003. The two new lines were crafted to

appear legitimate, by using an “=” where a

“==” would be expected. The configuration management

tools immediately identified a discrepancy, and examination of the

changes by the Linux developers quickly determined that it was an

attempted attack [Miller2003] [Andrews2003].

More recently, in 2009 the Win32.Induc virus was discovered in

the wild. This virus attacks Delphi compiler installations,

modifying the compiler itself. Once the compiler is infected, all

programs compiled by that compiler will be infected [Mills2009]

[Feng2009]. Thus, countering subverted compilers is no longer an

academic exercise; attacks on compilers have already occurred.

Many have noted insertion of malicious code into software as an

important risk:

-

Many have noted subversion of software as an issue in electronic

voting machines [Saltman1988] [Kohno2004] [Feldman2006]

[Barr2007].

-

The U.S. Department of Defense (DoD) established a

“software assurance initiative” in 2003 to examine

software assurance issues in defense software, including how to

counter intentionally inserted malicious code [Komaroff2005]. In

2004, the U.S. General Accounting Office (GAO) criticized the DoD,

claiming that the DoD “policies do not fully address the risk

of using foreign suppliers to develop weapon system software...

policies [fail to focus] on insider threats, such as the insertion

of malicious code by software developers...” [GAO2004]. The

U.S. Committee on National Security Systems (CNSS) defines Software

Assurance (SwA) as “the level of confidence that software is

free from vulnerabilities, either intentionally designed into the

software or accidentally inserted at anytime during its lifecycle,

and that the software functions in the intended manner”

[CNSS2006]. Note that intentionally-created vulnerabilities

inserting during software development are specifically included in

this definition.

-

The President’s Information Technology Advisory Committee

(PITAC) found that “Vulnerabilities in software that are

introduced by mistake or poor practices are a serious problem

today. In the future, the Nation may face an even more challenging

problem as adversaries – both foreign and domestic –

become increasingly sophisticated in their ability to insert

malicious code into critical software” [PITAC2005, 9]. The

U.S. National Strategy to Secure Cyberspace reported that a

“spectrum of malicious actors can and do conduct attacks

against our critical information infrastructures. Of primary

concern is the threat of organized cyber attacks capable of causing

debilitating disruption to our Nation’s critical

infrastructures, economy, or national security.... [and could

subvert] our infrastructure with back doors and other means of

access.” [PCIB2003,6]

-

In 2003, China's State Council announced a plan requiring all

government ministries to buy only locally produced software when

upgrading, and to increase use of open source software, in part due

to concerns over “data spyholes installed by foreign

powers” in software they procured for government use

[CNETAsia2003].

In short, as software becomes more pervasive, subversion of it

becomes ever more tempting to powerful individuals and

institutions. Attackers can even buy legitimate software companies,

or build them up, to widely disseminate quality products at a low

price... but with “a ticking time bomb inside”

[Schwartau1994, 304-305].

Not all articles about subversion specifically note the trusting

trust attack as an issue, but as noted earlier, for source code

evaluations to be strongly credible, there must be a way to justify

that the source code being examined accurately represents what is

being executed—yet the trusting trust attack subverts that

very claim. Internet Security System’s David Maynor argues

that the risk of attacks on compilation processes is increasing

[Maynor2004] [Maynor2005]; Karger and Schell noted that the

trusting trust attack was still a problem in 2000 [Karger2000], and

some technologists doubt that computer-based systems can ever be

secure because of the existence of this attack [Gauis2000].

Anderson et al. argue that the general risk of subversion is

increasing [Anderson2004]. Williams argues that the risk from

malicious developers should be taken seriously, and describes a

variety of techniques that malicious programmers can use to insert

and hide attacks in an enterprise Java application

[Williams2009].

2.7 Previous DDC

paper

Initial results from DDC research were published by the Annual

Computer Security Applications Conference (ACSAC) in [Wheeler2005].

This paper was well-received, for example, Bruce Schneier wrote a

glowing review and summary of the paper [Schneier2006], and the

Spring 2006 class “Secure Software Engineering Seminar”

of Dr. James Walden (Northern Kentucky University) included it in

its required reading list.

This dissertation includes the results of [Wheeler2005] and

refines it further:

-

The definition of DDC is generalized to cover the case where the

compiler is not self-regenerating. Instead, a compiler-under-test

may have been generated using a different “parent”

compiler. Self-regeneration (where the putative source code of the

parent and compiler-under-test are the same) is now a special

case.

-

A formal proof of DDC is provided, including a formalization of

DDC assumptions. The earlier paper includes only an informal

justification. The proof covers cases where the environments are

different, including the effect of different text representation

systems.

-

A demonstration of DDC with a known maliciously corrupted

compiler is shown. As expected, DDC detects this case.

-

A demonstration of DDC with an industrial-strength compiler

(GCC) is shown.

-

The discussion on the application of DDC is extended to cover

additional challenges, including its potential application to

hardware.

3 Description of

threat

Thompson describes how to perform the trusting trust attack, but

there are some important characteristics of the attack that are not

immediately obvious from his presentation. This chapter examines

the threat in more detail and introduces terminology to describe

the threat. This terminology will be used later to explain how the

threat is countered. For a more detailed model of this threat, see

[Goerigk2000] and [Goerigk2002] which provide a formal model of the

trusting trust attack.

The following sections describe what might motivate an attacker

to actually perform such an attack, and the mechanisms an attacker

uses that make this attack work (triggers, payloads, and

non-discovery).

3.1 Attacker motivation

Understanding any potential threat involves determining the

benefits to an attacker of an attack, and comparing them to the

attacker’s risks, costs, and difficulties. Although this

trusting trust attack may seem exotic, its large benefits may

outweigh its costs to some attackers.

The potential benefits are immense to a malicious attacker. A

successful attacker can completely control all systems that are

compiled by that executable and that executable’s

descendants, e.g., they can have a known login (e.g., a

“backdoor password”) to gain unlimited privileges on

entire classes of systems. Since detailed source code reviews will

not find the attack, even defenders who have highly valuable

resources and check all source code are vulnerable to this

attack.

For a widely-used compiler, or one used to compile a widely-used

program or operating system, this attack could result in global

control. Control over banking systems, financial markets,

militaries, or governments could be gained with a single attack. An

attacker could possibly acquire enormous funds (by manipulating the

entire financial system), acquire or change extremely sensitive

information, or disable a nation’s critical infrastructure on

command.

An attacker can perform the attack against multiple compilers as

well. Once control is gained over all systems that use one

compiler, trust relationships and network interconnections could be

exploited to ease attacks against other compiler executables. This

would be especially true of a patient and careful attacker; once a

compiler is subverted, it is likely to stay subverted for a long

time, giving an attacker time to use it to launch further

attacks.

An attacker (either an individual or an organization) who

subverted a few of the most widely used compilers of the most

widely-used operating systems could effectively control, directly

or indirectly, almost every computer in existence.

The attack requires knowledge about compilers, effort to create

the attack, and access (gained somehow) to the compiler executable,

but all are achievable. Compiler construction techniques are

standard Computer Science course material. The attack requires the

insertion of relatively small amounts of code, so the attack can be

developed by a single knowledgeable person. Access rights to change

the relevant compiler executables are usually harder to acquire,

but there are clearly some who have such privileges already, and a

determined attacker may be able to acquire such privileges through

a variety of means (including network attack, social engineering,

physical attack, bribery, and betrayal).

The amount of power this attack offers is great, so it is easy

to imagine a single person deciding to perform this attack for

their own ends. Individuals entrusted with compiler development

might succumb to the temptation if they believed they could not be

caught. Today there are many virus writers, showing that many

people are willing to write malicious code even without gaining the

control this attack can provide.

It is true that there are other devastating attacks that

an attacker could perform in the current environment. Many users

routinely download and install massive executables, including large

patches and updates, that could include malicious code, and few

users routinely examine executable machine code or byte code. Few

users examine source code even when they can receive it, and

in many cases users are not legally allowed to examine the source

code. As a result, here are some other potentially-devastating

attacks that could be performed besides the trusting trust

attack:

-

An attacker can find unintentional vulnerabilities in existing

executables, and then write code to exploit them.

-

An attacker could modify or replace a widely-used/important

executable during or after its compilation, but before its release

by its supplier. For example, an attacker might be able to do this

by bribing or extorting a key person in the supplying organization,

by becoming a key person, or by subverting the supplier’s

infrastructure.

-

Even when users only accept source code and compile the source

code themselves, an attacker could insert an intentional attack in

the source code of a widely-used/important program in the hope that

no one will find it later.

-

An attacker with a long-range plan could develop a useful

program specifically so that they can embed or eventually embed an

attack (using the two attacks previously noted). In such cases the

attacker might become a trusted (but not trustworthy) supplier.

However, there is a fundamental difference with the

attacks listed above and the trusting trust attack: there are

known detection techniques for these attacks:

-

Static and dynamic analysis can detect many unintentional

vulnerabilities, because they tend to be caused by common

implementation mistakes. In addition, software designs can reduce

the damage from such mistakes, and some implementation languages

can completely eliminate certain kinds of mistakes. Many documents

discuss how to develop secure software for those trying to do so,

including [Wheeler2003s] and [NDIA2008].

-

If an attacker swaps the expected executable with a malicious

executable, without using a trusting trust attack, the attack can

be discovered by recompiling the source code to see if it produces

the same results (presuming a deterministic compiler is used). Even

if it is not discovered, recompilation of the next version of the

executable will often eliminate the attack if it is not a

“trusting trust” attack.

-

If an attacker inserts an intentional attack or vulnerability in

the source code, this can be revealed by examining the source code

(see section 8.11 for a discussion on attacks which are

intentionally difficult to find in source code).

-

If the user does not fully trust the supplier to perform such

tests, then these tests could be performed by the user (if the user

has the necessary information), or by a third party who is trusted

by the user and supplier (if the supplier is unwilling to give

necessary information to the user, but are willing to give it to

such a third party). If the supplier is unwilling to provide the

necessary information to either the user or a third party, the user

could reasonably conclude that using such suppliers is a higher

risk than using suppliers who are willing to provide this

information, and then take steps based on that conclusion.

In contrast, there has been no known effective detection

technique for the trusting trust attack. Thus, even if all of these

well-known detection techniques were used, users would still

be vulnerable to the trusting trust attack. What is more, the

subversion can persist indefinitely; the longer it remains

undetected, the more difficult it will be to reliably identify the

perpetrator even if it is detected.

Given such extraordinarily large benefits to an attacker, and

the lack of an effective detection mechanism, a highly resourced

organization (such as a government) might decide to undertake it.

Such an organization could supply hundreds of experts, working

together full-time to deploy attacks over a period of decades.

Defending against this scale of attack is far beyond the defensive

abilities of most companies and non-profit organizations who

develop and maintain popular compilers.

In short, this is an attack that can yield complete control over

a vast number of systems, even those systems whose defenders

perform independent source code analysis (e.g., those who have

especially high-value assets), so it is worth defending

against.

3.2

Triggers, payloads, and non-discovery

The trusting trust attack depends on three things: triggers,

payloads, and non-discovery. For purposes of this dissertation, a

“trigger” is a condition determined by an attacker in

which a malicious event is to occur (e.g., when malicious code is

to be inserted into a program). A “payload” is the code

that actually performs the malicious event (e.g., the inserted

malicious code and the code that causes its insertion). The attack

also depends on non-discovery by its victims, that is, it depends

on victims not detecting the attack (before, during, or after it

has been triggered).

For this attack to be valuable, there must be at least two

triggers that can occur during compilation: at least one to cause a

malicious attack directly of value to the attacker (e.g., detecting

compilation of a “login” program so that a Trojan horse

can be inserted into it), and one to propagate attacks into future

versions of the compiler executable.

If a trigger is activated when the attacker does not intend the

trigger to be activated, the probability of detection increases.

However, if a trigger is not activated when the attacker intends it

to be activated, then that particular attack will be disabled. If

all the attacks by the compiler against itself are disabled, then

the attack will no longer propagate; once the compiler is

recompiled, the attacks will disappear. Similarly, if a payload

requires a situation that (through the process of change)

disappears, then the payload will no longer be effective (and its

failure may reveal the attack).

In this dissertation, “fragility” is the

susceptibility of the trusting trust attack to failure, i.e., that

a trigger will activate when the attacker did not wish it to

(risking a revelation of the attack), fail to trigger when the

attacker would wish it to, or that the payload will fail to work as

intended by the attacker. Fragility is unfortunately less helpful

to the defender than it might first appear. An attacker can counter

fragility by simply incorporating many narrowly-defined triggers

and payloads. Even if a change causes one trigger to fail, another

trigger may still fire. By using multiple triggers and payloads, an

attacker can attack multiple points in the compiler and attack

different subsystems as final targets (e.g., the login system, the

networking interface, and so on). Thus, even if some attacks fail

over time, there may be enough vulnerabilities in the resulting

system to allow attackers to re-enter and re-insert new triggers

and payloads into a malicious compiler. Even if a compiler

misbehaves from malfunctioning malware, the results could appear to

be a mysterious compiler defect; if programmers “code

around” the problem, the attack will stay undetected.

Since attackers do not want their malicious code to be

discovered, they may limit the number of triggers/payloads they

insert and the number of attacked compilers. In particular,

attackers may tend to attack only “important” compilers

(e.g., compilers that are widely-used or used for high-asset

projects), since each compiler they attack (initially or to add new

triggers and payloads) increases the risk of discovery. However,

since these attacks can allow an attacker to deeply penetrate

systems generated with the compiler, maliciously corrupted

compilers make it easier for an attacker to re-enter a previously

penetrated development environment to refresh an executable with

new triggers and payloads. Thus, once a compiler has been

subverted, it may be difficult to undo the damage without a process

for ensuring that there are no attacks left.

The text above might give the impression that only the compiler

itself, as usually interpreted, can influence results (or how they

are run), yet this is obviously not true. Assemblers and loaders

are excellent places to place a trigger (the popular GCC C compiler

actually generates assembly language as text and then invokes an

assembler). An attacker could place the trigger mechanism in the

compiler’s supporting infrastructure such as the operating

system kernel, libraries, or privileged programs.

4 Informal description of Diverse Double-Compiling (DDC)

The idea of diverse double-compiling (DDC) was first created and

posted by Henry Spencer in 1998 [Spencer1998] in a very short

posting. It was inspired by McKeeman et al’s exercise for

detecting compiler defects [McKeeman1970] [Spencer2005]. Since this

time, this idea has been posted in several places, typically with

very short descriptions [Mohring2004] [Libra2004] [Buck2004]. This

chapter describes the graphical notation for describing DDC that is

used in this dissertation. This is followed by a brief informal

description of DDC, an informal discussion of its assumptions, a

clarification that DDC does not require that arbitrary

different compilers produce the same executable output given

the same input, and a discussion of a common special case:

Self-parenting compilers. This chapter closes by answering some

questions, including: Why not always use the trusted

compiler, and why is this different from N-version programming?

4.1

Terminology and notation

This dissertation focuses on compilers. For purposes of this

dissertation, compilers execute in some environment, receiving as

input source code as well as other input from the

environment, and producing a result termed an executable. A

compiler is, itself, an executable.

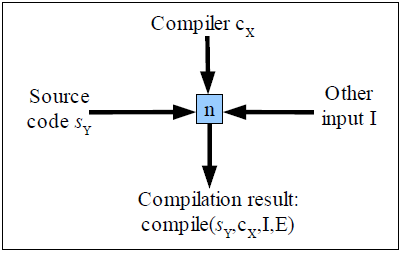

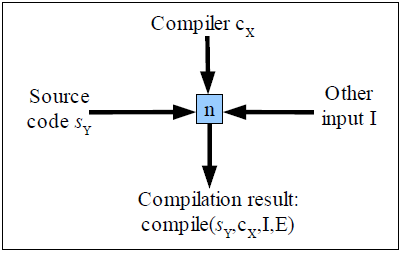

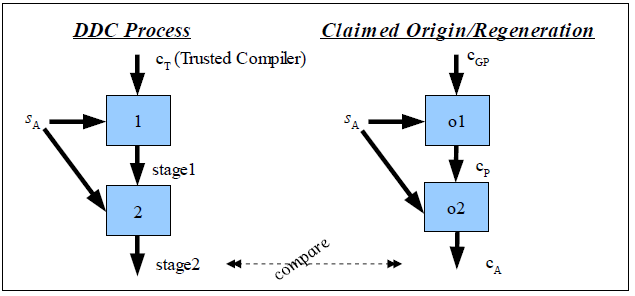

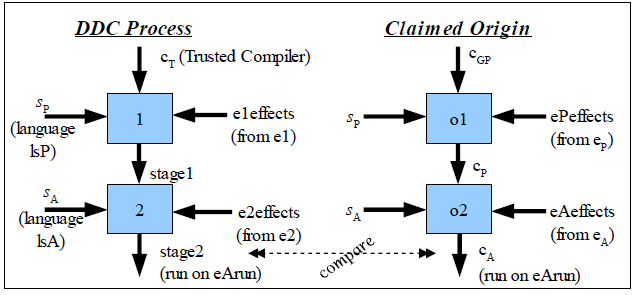

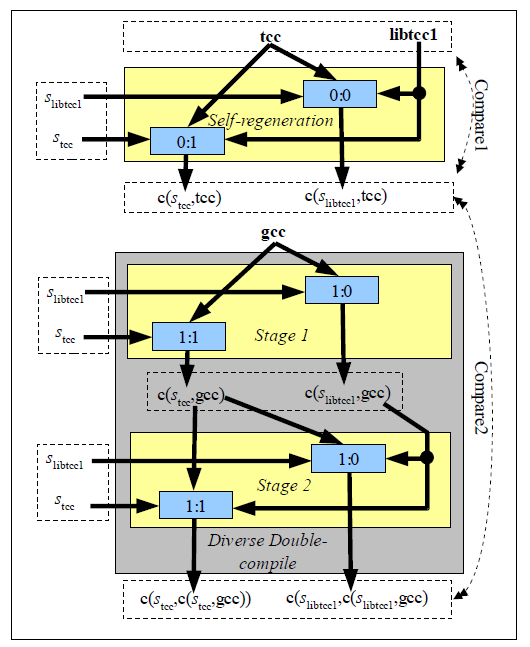

Figure 1: Illustration of graphical

notation

Figure 1 illustrates the notation used in this dissertation. A

shaded box shows a compilation step, which executes a compiler

(input from the top), processing source code (input from the left),

and uses other input (input from the right), all to produce an

executable (output exiting down). To distinguish the different

steps, each compilation step will be given a unique name (shown

here as “n”). Source code that is purported to be the

source code for the executable Y is notated as

sY. The result of a compilation step using

compiler X, source code sY, other input I (e.g.,

run-time libraries, random number results, and thread schedule),

and environment E is an executable, notated here as

compile(sY, cX, I, E).

Where the environment can be determined from context (e.g., it is

all the same) that parameter is omitted; where that is true and any

other input (if relevant) can be inferred, both are omitted

yielding the notation

compile(sY, cX). In some cases,

this will be further abbreviated as

c(sY, cX).

The widely-used “T diagram” (aka

“Bratman”) notation is not used in this dissertation.

T diagrams were originally created by Bratman [Bratman1961],

and later greatly extended and formalized by Earley and Sturgis

[Earley1970]. T diagrams can be very helpful when discussing

certain kinds of bootstrapping approaches. However, they are not a

universally perfect notation, and this dissertation intentionally

uses a different notation because the weaknesses of T diagrams

make DDC unnecessarily difficult to describe:

-

T diagrams combining multiple compilation steps can be very

confusing [Mogensen2007, 219]. This is a serious problem when

representing DDC, since DDC is fundamentally about multiple

compilation steps.

-

T diagrams quickly grow in width when multiple steps are

involved; since paper is usually taller than it is wide, this can

make complex situations more difficult to represent on the printed

page. Again, applying DDC involves multiple steps.

-

T diagrams do not handle multiple sub-components well

(e.g., a library embedded in a compiler). The notation can be

“fudged” to do this (see [Early1970, 609]) but the

resulting graphic is excessively complex. Again, compilation of

real compilers using DDC often involves handling multiple

sub-components, making this weakness more important.

-

T diagrams create unnecessary clutter when applied to DDC.

In a T diagram, every compiler source code and compiler

executable, as well as their executions, are represented by a T.

This creates unnecessary visual clutter, making it difficult to see

what is executed and what is not.

Niklaus Wirth abandoned T diagrams in his 1996 book on

compilers, without even mentioning them [Wirth1996], so clearly

T diagrams are not absolutely required when discussing

compiler bootstrapping. The notation of this dissertation uses a

single, simple box for each execution of a compiler, instead of a

trio of T shaped figures. As DDC application becomes complex,

this simplification matters.

4.2

Informal description of DDC

In brief, to perform DDC, source code must be compiled twice.

First, use a separate “trusted” compiler to compile the

source code of the “parent” of the compiler-under-test.

Then, run that resulting executable to compile the purported source

code of the compiler-under-test. Then, check if the final result is

exactly identical to the original compiler executable (e.g.,

bit-for-bit equality) using some trusted means. If it is, then the

purported source code and executable of the compiler-under-test

correspond, given some assumptions to be discussed later.

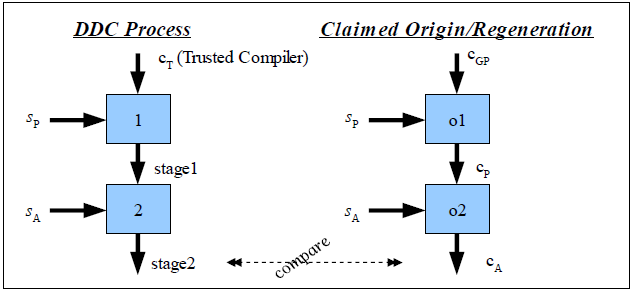

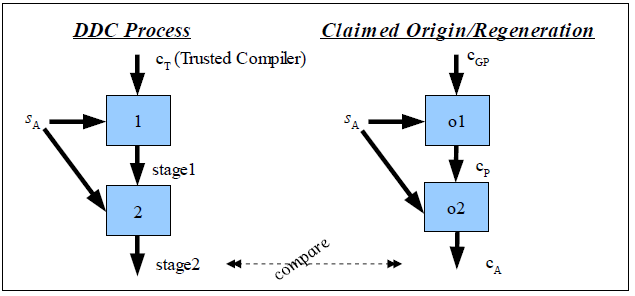

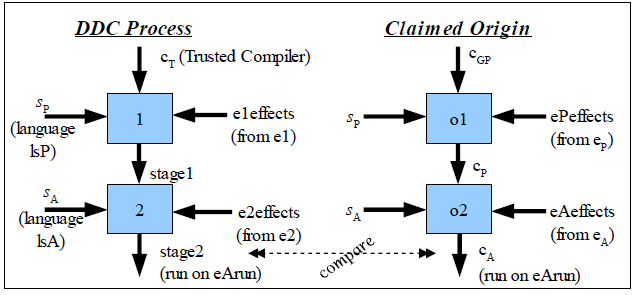

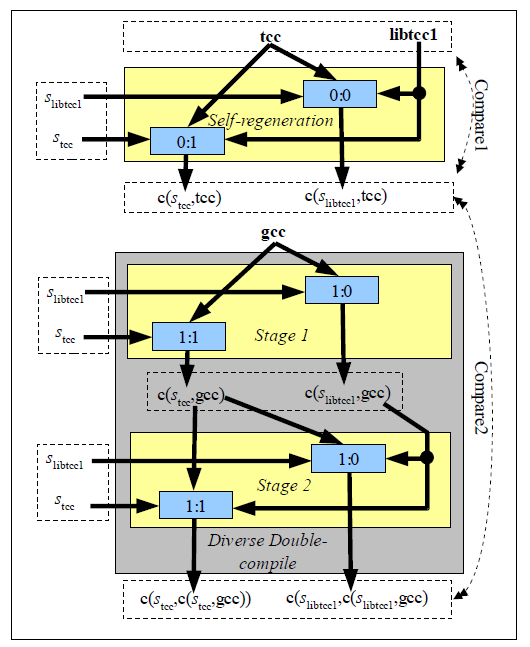

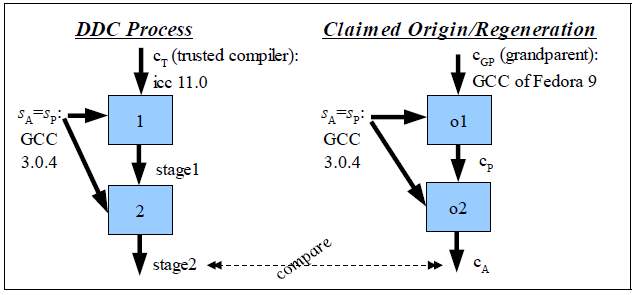

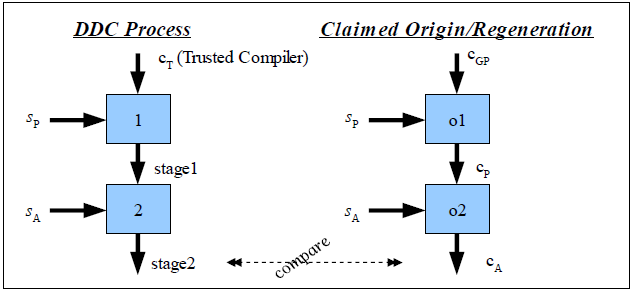

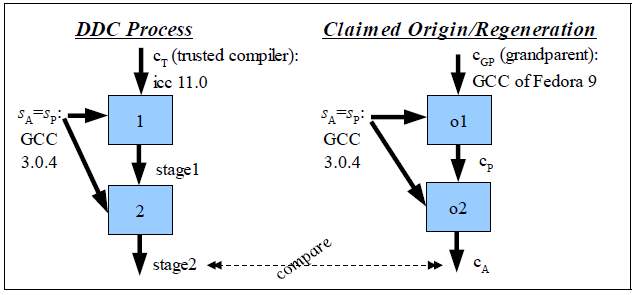

Figure 2: Informal graphical representation of DDC

Figure 2 presents an informal, simplified graphical

representation of DDC, along with the claimed origin of the

compiler-under-test (this claimed original process can be

re-executed as a check for self-regeneration). The dashed line

labeled “compare” is a comparison for exact equality.

This figure uses the following symbols:

-

cA: Executable of the compiler-under-test, which may

be corrupt (maliciously corrupted compilers are by definition

corrupt).

-

sA: Purported source code of compiler

cA. Our goal is determine if cA and

sA correspond.

-

cP: Executable of the compiler that is purported to

have generated cA (it is the purported

“parent” of cA).

-

sP: Purported source code of parent

cP. Often a variant/older version of

sA.

-

cT: Executable of a “trusted” compiler,

which must be able to compile sP.. The exact

meaning of “trusted” will be explained later.

-

1, 2, o1, o2: Stage identifiers. Each stage executes a

compiler.

-

stage1, stage2: The outputs of the DDC stages. Stage1 is a

function of cT and sP, and can be

represented as c(sP, cT) where

“c” means “compile”. Similarly, stage2 can

be represented as c(sA, stage1) or

c(sA, c(sP,

cT)).

The right-hand-side shows the process that purportedly generated

the compiler-under-test executable cA in the first

place. The right-hand-side shows the DDC process. The process

graphs are very similar, so it should not be surprising that the

results should be identical. This dissertation formally proves this

(given certain conditions) and demonstrates that this actually

occurs with real-world compilers.

Before performing DDC itself, it is wise to perform a

regeneration check, which checks to see if we can regenerate

cA using exactly the same process that was supposedly

used to create it originally. Since

cA was supposed to have been created this way in the

first place, regeneration should produce the same result. In

practice, the author has found that this is often not the case. For

example, many organizations’ configuration control systems do

not record all the information necessary to accurately regenerate a

compiled executable, and the ability to perform regeneration is

necessary for the DDC process. In such cases, regeneration acts

like the control of an experiment; it detects when we do not have

proper control over all the relevant inputs or environment.

Corrupted compilers can also pass the regeneration test, so by

itself the regeneration test is not sufficient to reliably detect

corrupted compilers.

We then perform DDC by compiling twice. These two compilation

steps are the origin of this technique’s name: we compile

twice, the first time using a different (diverse) trusted compiler.